Quite a while ago, I wrote about an application I made using api.ai, a conversational UX platform with a powerful voice recognition engine. Api.ai allows you to control your application using voice recognition, amongst other services it offers. I've been looking at how tech like this can be integrated into IoT applications. In this post, I will discuss an example I recently explored: turning lights on and off, using voice commands.

In my previous post, I showed how simple it is to set up a new application on api.ai. Although that post was about recognising faces using voice commands, and this one is about controlling a light switch, the steps I followed to set up the application are practically the same. I will give a brief overview of the process here, but please refer to the old post for more details.

Agent

I began by creating an Agent, called Lumière, which is the application itself. This allowed me to obtain the keys I needed for authentication when calling the api.ai API.

Entities

An application's Entities describe how users interact with it. A user can control the application by making either a statement or a request, and four entities should be enough to handle both.

- Action Verb (@act): This is an entity that is triggered when the user uses an action verb such as "turn", "toggle", etc. in their command.

- Modal Verb (@modal): This is triggered when the user's command begins with a modal verb such as "could", "would", etc.

- On (@on): A simple one- or two-word command to switch lights on.

- Off (@off): A simple one- or two-word command to switch lights off.

Intents

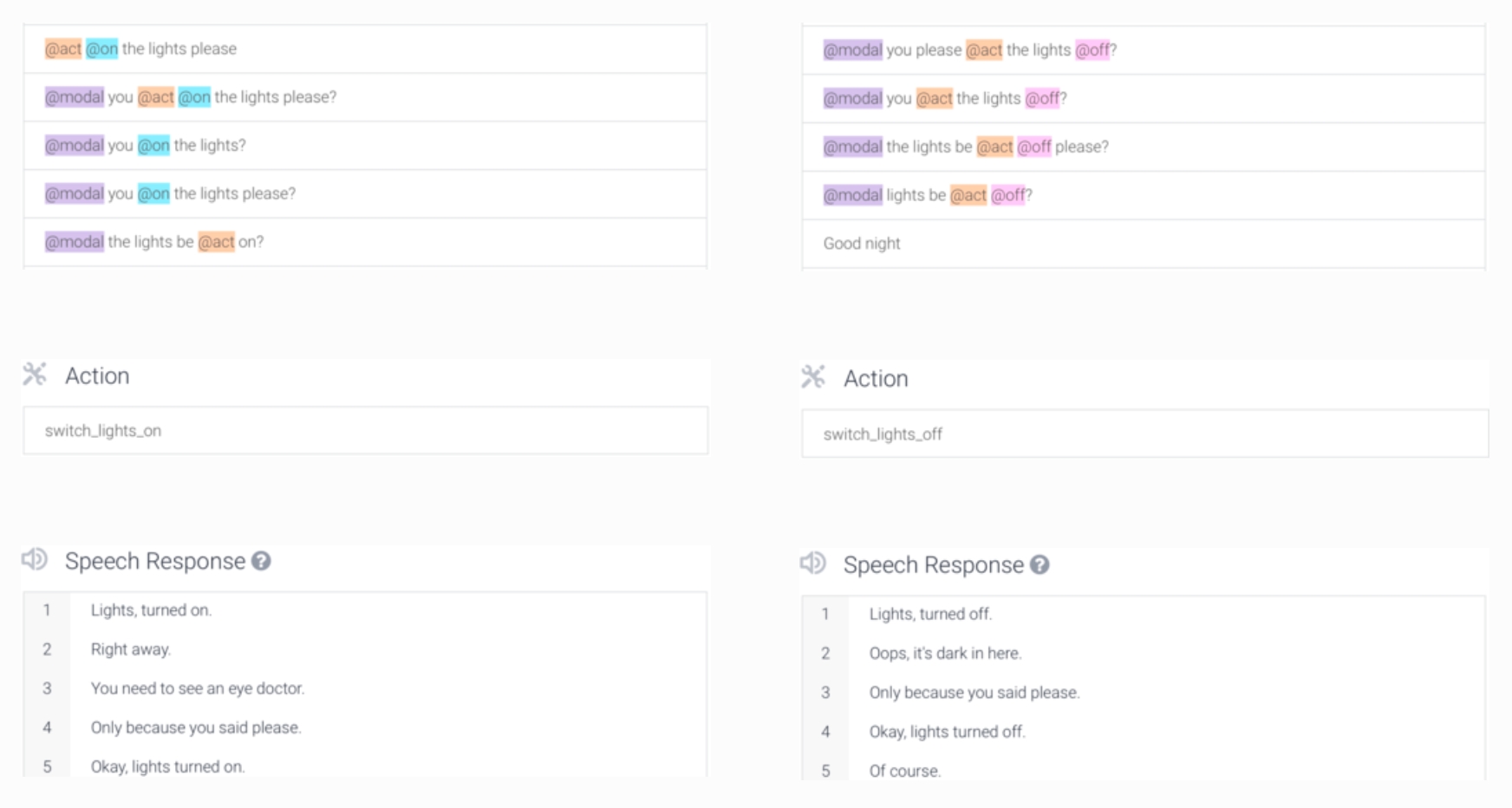

Intents describe what actions the application performs. Since the goal here is to simply switch a light bulb/LED on or off, all we need are two intents, ON and OFF. After creating these intents, we can then go into details about how and when these actions are triggered. For each intent, we must define three things (examples shown in brackets):

- What the user says (@act lights @on please).

- Action to be taken (switch_lights_on).

- Speech response (Only because you said please).

In the example above, @act represents an action verb. For instance, the user might have said "Toggle lights on please". Because I have a preset instance for a command such as this, the 'intent' of the user is interpreted as a request to turn the lights on. Therefore the correct action, switch_lights_on, is taken (that is, returned when the API is called with such a command), and an appropriate response is given: "Only because you said please", in this case. You can add as many instances, actions and responses as you like. Api.ai gives you full flexibility to design your application as desired, making it fit for purpose. The images below show the user inputs, actions and speech responses for the ON and OFF intents.
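On the client side, the action name that comes back still has to be mapped to something that actually switches the light. A minimal sketch of that mapping follows. The `handlers` association, the `dispatch` helper and the `lightState` variable are hypothetical names, and `switch_lights_off` is an assumed counterpart to the `switch_lights_on` action named above.

```mathematica
(* hypothetical state variable standing in for a real lamp or LED *)
lightState = False;

(* map each action name to a zero-argument handler;
   "switch_lights_off" is an assumed name for the OFF intent's action *)
handlers = <|
   "switch_lights_on" -> Function[lightState = True],
   "switch_lights_off" -> Function[lightState = False]
   |>;

(* look up the handler for an action and run it; unknown actions do nothing *)
dispatch[action_String] := Lookup[handlers, action, Function[Null]][]

dispatch["switch_lights_on"];
lightState
```

Keeping the handlers in an association means a new intent only needs one extra entry, and an unrecognised action falls through without touching the light.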

User interface

With our application set up on api.ai, we now need to create a program that will allow us to utilise this application. You can integrate your api.ai system into a desktop/mobile app, wearable device, robot, etc. I created a Mathematica-based user interface to play around with mine. I did this because, within Mathematica, I can easily explore other possibilities with my api.ai application. I will go through what I consider to be the most important facets of this user interface.

Record command

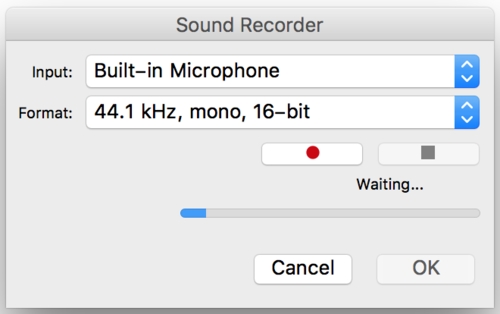

To use our newly created voice recognition app, we must be able to send requests to, and receive responses from it. Requests to api.ai are sent in the form of voice binary data. This means that a user's voice command is digitised and converted into a format that api.ai can understand. Mathematica allows you to do this quite easily with its built-in sound recorder.

rec = SystemDialogInput["RecordSound"]

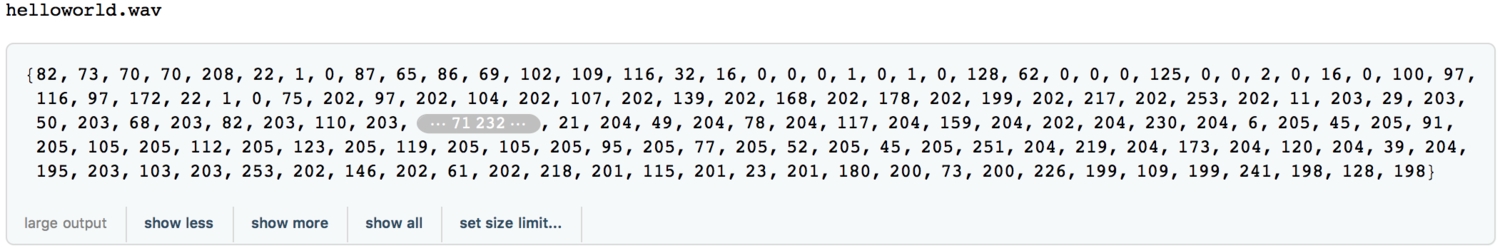

The image in the centre above shows the interface for recording sound, whilst that on the left shows a playable recording of "hello world". More details are shown here. Api.ai only accepts sound recordings in the .WAV format with a sampling rate of 16 kHz. However, Mathematica records sound at 44.1 kHz and in .MP3. So we will downsample our recording to 16 kHz and then save it in .WAV format before converting it to binary form.

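(* downsample the recorded samples, label them as 16 kHz and save as WAV *)
file = Export["helloworld.wav",
  Sound[SampledSoundList[Downsample[rec[[1, 1]], 3], 16000]]]
(* read the WAV file back as a list of bytes for the request below *)
binarydata = ImportString[ExportString[Import[file], "WAV"], "Binary"]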

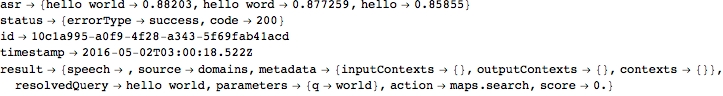
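One caveat on the arithmetic: keeping every third sample of a 44.1 kHz recording gives 44100/3 = 14700 samples per second, so labelling the result as 16000 Hz is only an approximation. As a rough alternative sketch, the samples can be resampled to exactly 16 kHz with `ArrayResample`, which interpolates the list to a chosen length. The `resampleTo` helper is a hypothetical name, and the synthetic tone simply stands in for `rec[[1, 1]]`. No anti-aliasing filter is applied, so this is illustrative rather than production-quality audio processing.

```mathematica
(* hypothetical helper: resample a mono list of samples from one rate to another *)
resampleTo[samples_List, fromRate_Integer, toRate_Integer] :=
  ArrayResample[samples, Round[Length[samples]*toRate/fromRate]]

(* one second of a 440 Hz tone at 44.1 kHz, standing in for rec[[1, 1]] *)
tone = Table[Sin[2. Pi 440 t/44100], {t, 0, 44099}];

resampled = resampleTo[tone, 44100, 16000];
Length[resampled]  (* 16000 samples for one second of audio *)

(* the result can be wrapped exactly as in the post *)
sound16k = Sound[SampledSoundList[resampled, 16000]];
```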

Send api.ai request

Now that we have our voice command in the format requested by api.ai, we can use our application by sending the binary form of our command to it. The returned result shows that api.ai correctly interpreted our command as "hello world". We did not create any intent or entity for this kind of command, so we do not get any response back.

(* send request *)
URLFetch["https://api.api.ai/v1/query",
 "Method" -> "POST",
 "Headers" -> {"Authorization" -> "Bearer a2*your bearer code here*", "ocp-apim-subscription-key" -> "bc*your subscription key here*"},
 "MultipartData" -> {{"request", "application/json", ToCharacterCode["{'lang':'en','timezone':'GMT'}", "UTF8"]}, {"voiceData", "audio/wav", binarydata}}
 ];
(* format result *)
Column@ImportString[%, "JSON"]

This demonstrates the fundamental workings of our app. We just need to create a nice interface for it.
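To act on a reply, the action and the speech response have to be pulled out of the returned JSON. The sketch below does this on a hand-written sample string, not real output from the service. The field names (`result`, `resolvedQuery`, `action`, `fulfillment`, `speech`) are an assumption about the layout of the query response and should be checked against what your own agent returns. It uses the "RawJSON" import format, which yields nested associations.

```mathematica
(* hand-written sample reply; the field names are assumed, not taken from real output *)
sample = "{\"result\": {\"resolvedQuery\": \"toggle lights on please\", \"action\": \"switch_lights_on\", \"fulfillment\": {\"speech\": \"Only because you said please\"}}}";

(* "RawJSON" parses JSON objects into associations *)
parsed = ImportString[sample, "RawJSON"];

query = parsed["result"]["resolvedQuery"];
action = parsed["result"]["action"];
speech = parsed["result"]["fulfillment"]["speech"];

{query, action, speech}
```

With a real request, `sample` would be replaced by the string returned from `URLFetch`.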

The interface

The interface can of course be controlled with or without the voice functionality. This functionality can, however, be activated by checking the Enable Voice Control box. Voice recognition can also be cancelled.
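A stripped-down version of such an interface might look like the sketch below. It is not the interface from this post: the layout, the variable names `voiceEnabled` and `lightOn`, and the coloured disk standing in for the lamp are all illustrative assumptions, and the voice request itself is left out.

```mathematica
(* minimal panel: a voice-control checkbox, a manual toggle and a lamp indicator *)
panel = DynamicModule[{voiceEnabled = False, lightOn = False},
   Column[{
     Row[{Checkbox[Dynamic[voiceEnabled]], " Enable Voice Control"}],
     (* manual control works whether or not voice control is enabled *)
     Button["Toggle light", lightOn = ! lightOn],
     (* yellow disk when the light is on, grey when it is off *)
     Dynamic[Graphics[{If[lightOn, Yellow, Gray], Disk[]}, ImageSize -> 50]],
     Dynamic[If[voiceEnabled, "Voice control is on", "Voice control is off"]]
     }]
   ];

panel
```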

Future developments

And that pretty much concludes this post - api.ai works well and allows you to design cool voice recognition apps. It is not perfect though, as you'd expect. It sometimes gets the commands wrong, but it is constantly learning and improving. I would like to extend this to do more in the future. Added functionality could include setting the level of brightness, choosing the colour of the light, or requesting that the lights come on or go off at a certain time. It would be even more interesting to run the application on a Raspberry Pi/Arduino connected to an LED! The possibilities are endless with voice recognition; you just have to imagine what could be.

— MS